The AI paradigm of knowing and its problems

By Thomas B. Fowler | Jun 21, 2024

[Part 2 of Technology and the limitations of artificial intelligence]

Why does AI fail so spectacularly? To answer this question, we must look at how computers operate, and how that limits what they can do. In order to do things that manifest “intelligence”, any AI system has to be based on a paradigm of knowing. That is, how does the computer “know” about its task or subject?


This is something about which AI engineers and theorists do not think, because there isn’t much choice. AI is perforce based on algorithmic processes that utilize inputs (data or sensors) and output instructions or text. Essentially, modern AI is based squarely on ideas that stem from the British empiricist tradition, in particular the philosophy of David Hume (1711-1776). There are three key elements: the division of functions among “components”, the type of report sent to the mind by the senses, and a nominalist view of the process. To see why this is the case, we must first briefly examine Hume’s ideas and then the basic architecture employed in nearly all modern system engineering, including several varieties of AI.

AI and Hume’s Theory of Knowing

Hume envisioned the body as a composite of discrete physical systems, with the senses sending their report to the “mind”, which then worked on these reports. These “reports” he termed “impressions”, which give rise to “ideas”:

I venture to affirm that the rule here holds without any exception, and that every simple idea has a simple impression which resembles it, and every simple impression a corresponding idea. (David Hume, A Treatise of Human Nature, p. 3)

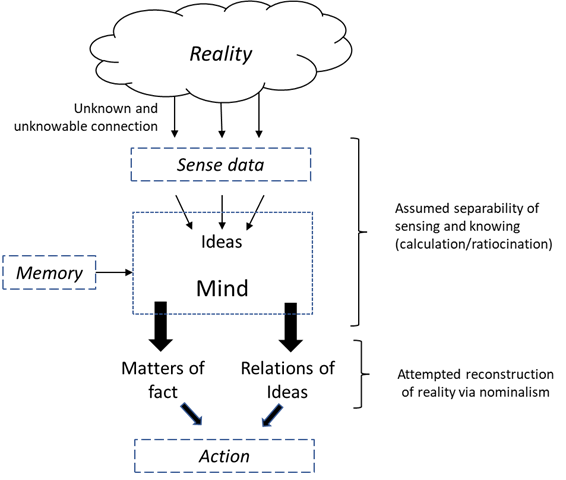

For Hume, knowledge is either “relations of ideas” or “matters of fact”. The “relations of ideas” are what we see with any kind of logical or mathematical inference, including mathematical theorems, as in geometry, or other cases where logical arguments can be used, as in syllogistic reasoning. Basically, it is logical inference, which we now represent with logical operations such as AND, OR, NOT, the material conditional “IF”, and combinations of them. On the other hand, “matters of fact” are what Hume takes to be empirically grounded facts, such as scientific laws. Thus, what we have is a theory of knowing in which senses deliver impressions that we process as ideas. Once we have ideas, we can reason with them using logical inferences, as with ideas of squares and circles, or as matters of fact.

General ideas are nothing more than particular representations, connected to a certain term. This quickly leads to Nominalism, the rejection of abstract or universal ideas in favor of specific individuals. Hume recognizes that we have, in our mind, such universal ideas. But they are just labels, not something that points to a reality. He rejects the ancient opinion that there exist universals in themselves. The basic structure is shown diagrammatically in Figure 1, with that of a typical AI system in Figure 2.
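To make the “relations of ideas” concrete, here is a minimal sketch in Python of the logical operations named above. The function names (`implies`, `truth_table`, `is_tautology`) are illustrative choices and do not come from Hume or from any particular AI system. The point is only that this kind of inference can be checked mechanically, by running through every combination of true and false.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q

def truth_table():
    """Rows of (p, q, p AND q, p OR q, NOT p, p IF q) for all four inputs."""
    return [(p, q, p and q, p or q, not p, implies(p, q))
            for p, q in product([False, True], repeat=2)]

def is_tautology(formula, n_vars: int) -> bool:
    """Brute-force check: the formula must hold under every assignment."""
    return all(formula(*vals)
               for vals in product([False, True], repeat=n_vars))

def modus_ponens(p: bool, q: bool) -> bool:
    """The syllogistic pattern ((p -> q) AND p) -> q, written as a formula."""
    return implies(implies(p, q) and p, q)

for row in truth_table():
    print(row)
print("modus ponens always holds:", is_tautology(modus_ponens, 2))
```

The check succeeds without the program having any notion of what p and q stand for, which is all that “relations of ideas” requires.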

The limitations of Hume’s theory are well-known. Hume was never able to explain how we get from “ideas as pale reflections of impressions” and “relations of ideas” to knowledge such as science, mathematics, and history. What sort of impression corresponds to Einstein’s field equations for General Relativity? Hume could not explain how we can recognize something in a position different from any in which we have seen it before, e.g., a traffic cone (something trivial for us). He could not explain the meaning of sentences that relate abstract objects, e.g., “Beethoven’s Fifth is a great symphony,” or sentences that involve counterfactuals, e.g., “Beethoven’s Tenth would have been a great symphony”. Hume could not deal with the fact that we actually do perceive reality, e.g., other people as humans, and act on it every day.


Some AI Implementations

We consider three representative implementations of AI to illustrate its relationship to Hume’s theory of knowing, and then some of the significant problems that pertain to that theory and hence to AI.

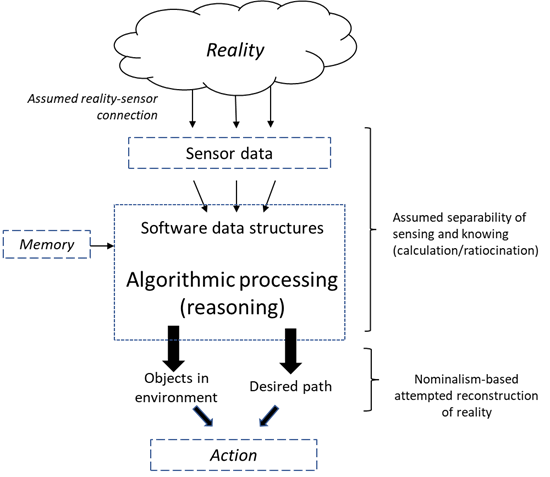

AI and Robotic Systems

These systems, which include robots and self-driving cars, utilize the modular design concept, with separability of functions. Sensors send reports to a central processor, which utilizes algorithms to do calculations on the sensor data and then instructs mechanical parts to carry out operations. They take Hume’s ideas of separability of sensing and knowing at face value, and develop systems that emulate them, based on the standard engineering practice of isolating system functions, which includes separating environmental sensors from processing. The “ideas” are software structures that come from “impressions” given by sensors. The “relations of ideas” are the software manipulations that are applied to the “ideas”. The “feelings” are the beliefs or prejudices of the programmers, and causality as constant conjunction becomes statistical measures gleaned from iterations of the programs. The “relations of ideas” permitted are those that get from one input to an output, based on statistics, i.e., “feelings”. Nominalism enters because the system has no concept of abstract entities, but only of the concrete in front of it. See Table 1 for a summary.

| Area | Hume | AI |
| --- | --- | --- |
| Perception | Senses give direct impressions | Sensors deliver raw data |
| Ideas | Pale, lifeless copies of impressions | Raw data stored in memory as data structure |
| Logical inference | Relations of ideas | Logic in programs |
| Knowledge of world | Matters of fact | Manipulation of data structure |
| Derivation of ideas | Impressions | Data from sensors |
| Principles of knowledge | Feelings | Programmer’s choice |
| Causality | Constant conjunction | Statistical inference |
| Complexity | Complex ideas composed of simple ideas | Hierarchical data structure |
| Nominalism | Only concrete entities and collections | Can recognize only names of abstract entities |

Table 1. Parallels between Hume’s epistemology and AI paradigm of knowing
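The modular arrangement described in this section, with sensors reporting to a processor that then instructs mechanical parts, can be sketched in a few lines of Python. Everything here is hypothetical and chosen only for illustration: the field names `distance_m` and `speed_mps`, the two-second threshold, and the three commands. No real vehicle or robot is claimed to work this way.

```python
from dataclasses import dataclass

@dataclass
class Impression:
    """A sensor report stored as a data structure (hypothetical fields)."""
    distance_m: float  # range to the nearest obstacle, in meters
    speed_mps: float   # current speed, in meters per second

def sense(raw: dict) -> Impression:
    """Sensor module: convert a raw reading into a stored 'impression'."""
    return Impression(float(raw["distance_m"]), float(raw["speed_mps"]))

def decide(imp: Impression, min_gap_s: float = 2.0) -> str:
    """Processor module: apply a rule the programmer chose in advance."""
    if imp.speed_mps <= 0:
        return "hold"
    time_to_obstacle = imp.distance_m / imp.speed_mps
    return "brake" if time_to_obstacle < min_gap_s else "cruise"

def act(command: str) -> str:
    """Actuator module: here it only reports what it would do."""
    return f"actuator: {command}"

def control_step(raw: dict) -> str:
    """One pass through the chain: sense, then decide, then act."""
    return act(decide(sense(raw)))

print(control_step({"distance_m": 10, "speed_mps": 20}))
print(control_step({"distance_m": 100, "speed_mps": 20}))
```

Each module sees only the data handed to it. The threshold `min_gap_s` is the “programmer’s choice” row of Table 1, and nothing in the program represents what an obstacle is.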

Generative AI

While Generative AI is not structured in exactly the same way as AI applications such as robotics, it shares many similarities, including nominalism. AI scans large collections of works utilizing key words and phrases, takes the results and assembles them, based on frequency, into a report, following rules of grammar and knowledge of word order frequencies, but without knowledge of the abstract entities and ideas involved. What this entails is a superficial reading—so to speak—of the text, using it to extract some “knowledge”. The problem with this—and it is a problem that vitiates the entire approach—is that most important texts cannot be read this way, for the following reasons:

- For many works, especially literature and philosophy, the message or theme requires a holistic understanding of the entire text. The text as a whole conveys the message, not a particular piece or excerpt of it.

- The message or theme may be different from the text narrative. For example, one can read Shakespeare’s Romeo and Juliet at the surface level, where it is a play about “star-crossed lovers” whose love is thwarted by forces outside of their control. But the real message of the play is different, namely, it shows the dangers of vendettas.

- The meaning of a work may depend on the reader’s personal experience. Especially with poetry, this is the case.

- Many texts have multiple levels of meaning. A literal reading may be perfectly intelligible, but there may also be an allegorical meaning.

- The real meaning of a text may be the exact opposite of what the words say. This is common in satirical and humorous writings.

- Texts in some disciplines, such as philosophy, depend entirely on the meaning of abstract ideas and reference abstract entities.

Only in some cases is the literal meaning of a text the principal meaning, such as in scientific writing and most historical writings, and even in those cases, there is abundant reference to abstract entities. Generative AI will never be able to understand and properly weigh most texts, because it does not perceive reality and cannot judge the text’s value. To do that, a reader must be able to read and understand the entire text (including very abstract ideas and what they entail or imply), take into consideration the writer’s goal, presuppositions, and biases, and then relate the work to others to ascertain its thoroughness, accuracy, and contribution value.
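The idea of assembling text from word order frequencies, described at the start of this section, can be illustrated with a toy bigram model in Python. This is a deliberately simplified sketch: production generative systems are far more elaborate, and the corpus, function names, and seed below are made up for the example. It shows only how plausible-looking word sequences can be produced from counts alone.

```python
import random
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict:
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows: dict, start: str, length: int, seed: int = 0) -> str:
    """Emit up to `length` words, sampling each next word by its count."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:  # the last word was never followed by anything
            break
        words, counts = zip(*options.items())
        out.append(rng.choices(words, weights=counts, k=1)[0])
    return " ".join(out)

corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(corpus)
print(generate(model, "the", 8))
```

Every adjacent pair of words in the output occurred somewhere in the corpus, yet the program holds no representation of cats, mats, or sitting.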

Neural networks

Consider now another well-known instance of AI, neural networks, which some consider to be the correct way to make machines “think” in a manner similar to that of the human brain. Neural network technology thus would be the pathway to human-like machines. But is this really what they do? Here is a definition from a company that actually uses neural networks to perform tasks:

Neural networks are a set of algorithms, modeled loosely after the human brain, that are designed to recognize patterns. They interpret sensory data through a kind of machine perception, labeling or clustering raw input. The patterns they recognize are numerical, contained in vectors, into which all real-world data, be it images, sound, text or time series, must be translated. (“A Beginner’s Guide to Neural Networks and Deep Learning”, skymind.ai).

Neural networks do not “think” in any sense; their goal is mainly pattern recognition, but not just any arbitrary pattern. Commonly they “classify data when they have a labeled dataset to train on,” called “supervised learning”. The goal is a functional relationship of the general form y = f(x) that expresses a correlation between input x and an output y, allowing predictions, akin to regression analysis. However, at bottom, neural networks are not fundamentally different from other types of programmed machines. George Gilder observes that though niche applications are important, e.g., recognizing faces, or interpreting speech, they are not the long-sought nirvana of general AI:

AI is just another advance in computer technology, like the other ones. It is not creating rivals for the human brain…. To observers of such trends, it is easy to imagine a future in which the role of humans steadily shrinks…. The basic problem with these ideas [of AI] is their misunderstanding of what computers do. Computers shuffle symbols. (“A Beginner’s Guide to Neural Networks and Deep Learning”, skymind.ai)

What is the conclusion? That neural networks operate differently from human intelligence, and only mimic it in ways that are very fragile:

…never fall into the trap of believing that neural networks understand the task they perform—they don’t…. They were trained on a different, far narrower task than the one we wanted to teach them: that of merely mapping training inputs to training targets, point by point. Show them anything that deviates from their training data, and they will break in the most absurd ways. (blog.keras.io/the-limitations-of-deep-learning.html)
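The relationship y = f(x) and its fragility outside the training data can be illustrated with the simplest possible stand-in for a neural network: an ordinary least-squares line, written here in plain Python. This is a swapped-in technique, not a neural network, and the “true process” y = x² and the training range 0 to 1 are assumptions made up for the example. The sketch fits inputs to targets and then asks for a prediction far from anything it was trained on.

```python
def fit_line(xs, ys):
    """Ordinary least squares for the model y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

def true_process(x):
    """Hypothetical relationship that generates the training targets."""
    return x * x

# Training data covers only the interval from 0.0 to 1.0.
train_x = [i / 10 for i in range(11)]
train_y = [true_process(x) for x in train_x]
a, b = fit_line(train_x, train_y)

def predict(x):
    """The learned mapping y = f(x)."""
    return a * x + b

# Worst error on the training inputs versus error far outside them.
in_range_error = max(abs(predict(x) - true_process(x)) for x in train_x)
far_error = abs(predict(10.0) - true_process(10.0))
print(f"worst error inside training range: {in_range_error:.2f}")
print(f"error at x = 10: {far_error:.2f}")
```

Inside the training range the fitted line stays close to the targets. At x = 10 it is off by a wide margin, because the fit captured a correlation over the data it saw and nothing about the process that produced it.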

Symbolic manipulation programs

Symbol manipulation programs, in the form of applications that can do symbolic mathematics (as opposed to numerical calculation), have been around for many decades. Most famous is Wolfram’s Mathematica® (1988). Obviously, these programs predate most of what today is termed “AI.” Such programs have a remarkable ability to draw on a large database of algorithms to solve problems. In that sense, they give the impression of “knowing” mathematics; and unlike the chatbots, they don’t “make up answers”. But as any user will tell you, they don’t “understand” mathematics, and the proof is that they are not valuable to someone who doesn’t already understand the mathematics involved in a problem.
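What symbolic manipulation amounts to can be seen in a toy differentiator written in Python. This sketch is not how Mathematica or any real computer algebra system is implemented; the nested-tuple representation and the handful of rules are assumptions chosen to keep the example short. It applies the sum and product rules purely by matching the shape of an expression.

```python
def diff(expr, var):
    """Differentiate a nested-tuple expression by pattern-matching rules."""
    if isinstance(expr, (int, float)):
        return 0                        # derivative of a constant
    if isinstance(expr, str):
        return 1 if expr == var else 0  # derivative of a variable
    op, left, right = expr
    if op == "+":                       # sum rule
        return ("+", diff(left, var), diff(right, var))
    if op == "*":                       # product rule
        return ("+", ("*", diff(left, var), right),
                     ("*", left, diff(right, var)))
    raise ValueError(f"unknown operator: {op}")

def simplify(expr):
    """Rewrite rules: fold constants, drop 0 and 1 where they do nothing."""
    if not isinstance(expr, tuple):
        return expr
    op, left, right = expr[0], simplify(expr[1]), simplify(expr[2])
    if isinstance(left, (int, float)) and isinstance(right, (int, float)):
        return left + right if op == "+" else left * right
    if op == "*":
        if left == 0 or right == 0:
            return 0
        if left == 1:
            return right
        if right == 1:
            return left
    if op == "+":
        if left == 0:
            return right
        if right == 0:
            return left
    return (op, left, right)

# d/dx of (x*x + 3*x), written as nested tuples
expr = ("+", ("*", "x", "x"), ("*", 3, "x"))
print(simplify(diff(expr, "x")))
```

The result is correct but comes out as x + x + 3 rather than 2x + 3, because no rule was supplied for collecting like terms. The program does exactly what its rules say and nothing more, and it takes a user who already knows the mathematics to see that the two forms are the same.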

Previous in series: AI, its capabilities and threats (Part 1 of Technology and the limitations of artificial intelligence)

Next in series: The human paradigm of knowing: How it is different and why it is not replicable with AI (Part 3 of Technology and the limitations of artificial intelligence)


Posted by: ifomis2828 - Jun. 23, 2024 12:39 PM ET USA

Your account of the parallelism between Hume's epistemology and the way AI works is impressive. However, I think there is a dimension that you neglect (also in Part 1 of this essay), which is the role of the human will. AI will not be able to replace (most) human jobs not only because humans possess creativity, but also because humans possess a will (volition). More (perhaps too much) in https://buffalo.app.box.com/v/AI-Without-Fear.