AI, its capabilities and threats

By Thomas B. Fowler | Jun 05, 2024

[Part 1 of Technology and the limitations of artificial intelligence]

Overview

Artificial Intelligence (AI) has become a controversial and disturbing subject today. There are many contributing factors to this lamentable state of affairs, but unfounded and erroneous claims made for AI greatly exacerbate the situation. AI has become a catch-all phrase that sums up belief in the power of machines, both now and in the future. The thrust is that computers can now do many things formerly reserved to humans alone, thereby duplicating human intelligence, and that their capabilities will grow so much in the future that AI systems will “take over”.

But what is AI? AI is the category of systems that use computers, feedback, rule-based logical inference (deterministic or statistical), complex data structures, large databases, and a particular paradigm of knowing to extract information and patterns from data and apply them to control processes, make decisions, or answer queries. The kinds of technology that typically fall under the rubric of “Artificial Intelligence” include:

- Robots and robotic systems

- Neural networks and pattern recognition

- Generative AI, including ChatGPT and similar applications using Large Language Models (LLMs)

- Symbolic manipulation programs such as Mathematica®

- Autonomous cars and other autonomous systems

- Complex large-scale control programs

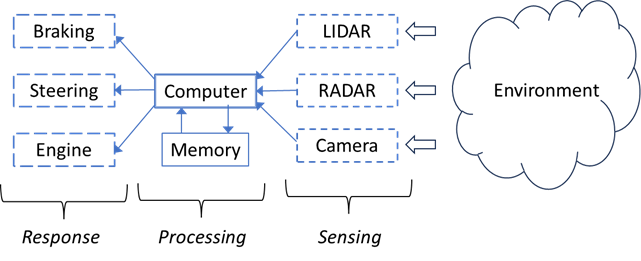

A schematic view of autonomous (self-driving) cars illustrates the main functional areas and operation of several classes of AI (Figure 1).

The car has sensors that detect shapes and movement. This information is sent to a computer, which has a database that allows it to extract information from the sensor data and then use it to determine a course of action. At no time does the AI system perceive the shapes and their movements as real, e.g., as real people; it is simply programmed to make decisions about what to do when certain shapes and movements are detected. This mimics what a human driver does, but is completely different from it.
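To make the decision step concrete, here is a deliberately simplified sketch. It assumes a hypothetical perception stage that has already reduced the sensor data to labeled shapes with a distance and a closing speed; the labels, the `choose_action` function, and the numeric thresholds are all invented for illustration. Real autonomous-driving software is vastly more elaborate, but the structure is the same: detected categories and measurements go in, a chosen action comes out, and nothing in the procedure treats a "pedestrian" label as a real person.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical perception output: a labeled shape, not a 'person'."""
    label: str                # e.g. "pedestrian_shape", "vehicle_shape" (illustrative labels)
    distance_m: float         # distance ahead of the car, in metres
    closing_speed_mps: float  # positive if the gap is shrinking, in metres per second


def choose_action(detections: list[Detection]) -> str:
    """Map detected shapes and movements to an action using fixed, illustrative rules."""
    action = "maintain_speed"
    for d in detections:
        # Time until the gap closes, if it is closing at all.
        if d.closing_speed_mps > 0:
            time_to_contact = d.distance_m / d.closing_speed_mps
        else:
            time_to_contact = float("inf")
        if time_to_contact < 2.0:
            return "emergency_brake"  # the most urgent rule returns immediately
        if d.label == "pedestrian_shape" and d.distance_m < 30.0:
            action = "slow_down"
    return action


scene = [Detection("vehicle_shape", 50.0, 5.0), Detection("pedestrian_shape", 20.0, 0.0)]
print(choose_action(scene))
```

Whatever the thresholds, the program only ever matches patterns in numbers and labels against rules someone wrote in advance.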

AI is not some radically new technology, but an evolutionary development of existing technologies, with the same limitations, as we shall see. Because of the cachet associated with the name “AI”, many existing programs and processes have been re-branded as “AI”, regardless of whether they incorporate any significant new capabilities.

AI will be able to:

- Automate some jobs, and displace some workers (though at the same time creating new jobs)

- Supplement and assist human research and development activities

- Aid humans in many other fields and actions

- Extend current computer capabilities

AI will never be able to:

- Automate most jobs, since most require creative action on the part of the job holder

- “Take control” because it does not perceive reality

- Outstrip human knowledge of reality

AI fails to duplicate human knowing for three reasons:

- It has no conception of truth (or any abstract entity)

- It does not perceive reality (only surrogates such as shapes, movements, and existing texts)

- It has no capacity for truly creative thought (only algorithm-based combinations of existing texts or procedures)

Visions of AI “taking over” and other threats

AI has been relentlessly hyped. Ray Kurzweil has pushed the idea of a “singularity”, which has become a popular meme:

We are entering a new era. I call it “the Singularity”. It’s a merger between human intelligence and machine intelligence that is going to create something bigger than itself. It’s the cutting edge of evolution on our planet…that is what human civilization is all about. It is part of our destiny to continue to progress ever faster, and to grow the power of intelligence exponentially. (Kurzweil)

In the minds of some, AI represents a very serious threat, one which requires immediate action to save humanity. Some aver that catastrophe is right around the corner. Eliezer Yudkowsky, a researcher at the Machine Intelligence Research Institute, warns that the death of humanity “is the obvious thing that would happen.” (Yudkowsky)

AI is feared for another reason, viz. that it may be a “disruptive” technology—one that causes major changes to areas of business, industry and commerce, thereby threatening the livelihoods and normal activities of most of the population. The automobile and the PC are prime examples of disruptive technologies from the past. However, for a technology to be disruptive, it actually has to work. In the case of AI, if expectations are based on a false theory of knowing, it will not work as envisioned and its disruptive potential will be limited.

Numerous books have been written that deal with the technological limitations of AI. This is naturally an important area of research and study, because it reveals application areas likely to benefit from AI, and others where technological limitations will constrain applications. Here we consider a different question, viz. whether the paradigm of knowing used in AI entails limitations that reveal the boundaries of AI, no matter how it is implemented and how fast the hardware. This is one of the most intriguing aspects of AI, because such a boundary implies a direct connection with philosophy. Indeed, once the discussion turns on issues such as sentience, “thinking”, and what constitutes “intelligence”, it leaves the realm of technology and enters that of philosophy, a situation that may not be comfortable for those immersed in technology, because philosophy operates on an entirely different plane of knowledge and understanding of the world.

Sentience and thinking immediately point to the question of whether the paradigm of knowing assumed for AI is that of human knowing, or in any way equivalent to it. The answer to this question will largely settle whether AI or any related technology can replace the important functions of human knowing, and thus humans, as opposed to simply enhancing these capabilities. As we shall see, while the varieties of AI use different algorithms and functional organization, they share certain common epistemological assumptions. These assumptions are never made explicit, and most of those who labor in AI fields are likely completely unaware of them. They also share three other characteristics, which we shall discuss: no conception of truth, inability to perceive reality, and no capacity for truly creative thought.

The actual state of AI: How smart is it?

It is reasonable to ask whether, after 70 years of development, computers have become sentient. Comparing a 1950s mainframe computer to a modern supercomputer, the memory improvement factor is 6,250,000 and the speed improvement factor is 29 trillion! While this means that supercomputers are capable of solving certain types of problems very rapidly, they aren’t sentient or anything close. The implication is that scaling of computer power will not yield the outcomes feared or lead to any sort of “singularity”.

ChatGPT and similar generative AI programs are widely deployed, including in Internet browsers. They can take a user’s query and generate a human-sounding response. While this sounds exciting, the chatbots have an unenviable track record. Let us consider some of their gaffes.

Climate scientist Tony Heller asked ChatGPT a simple question about CO2 levels and corals and shellfish. The answer that came back was completely wrong (Heller). ChatGPT is also known to simply make up articles and bylines, a problem that has struck The Guardian, since these phony articles were attributed to it:

Huge amounts have been written about generative AI’s tendency to manufacture facts and events. But this specific wrinkle—the invention of sources—is particularly troubling for trusted news organizations and journalists whose inclusion adds legitimacy and weight to a persuasively written fantasy. (Futurism)

Google’s AI Overview has recommended eating rocks and adding glue to pizza, and has advised pregnant women to smoke two or three cigarettes per day. Chatbots are getting worse at even basic math, exemplified by their inability to determine reliably whether a given number is prime. In another case, a lawyer used a chatbot to research and write a legal brief. Unfortunately for the lawyer, the brief contained numerous “bogus legal decisions” and made-up quotes, leading to potential sanctions.
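By contrast, primality is exactly the kind of question a conventional, non-AI program answers correctly every time. The sketch below uses plain trial division, chosen for clarity rather than speed; the function name `is_prime` is illustrative. Because it follows a fixed procedure instead of predicting plausible-sounding text, it gives the same, correct answer on every run.

```python
import math


def is_prime(n: int) -> bool:
    """Return True if n is prime, using trial division up to the square root of n."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    # Only odd divisors up to floor(sqrt(n)) need to be checked.
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


print([n for n in range(30) if is_prime(n)])
# [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```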

Obviously, if no one can trust citations, and entire articles can simply be made up, academic research, journalism, and everything else in our society that depends upon reliable knowledge will be undermined. The New York Times has explored this problem, which strikes at the heart of any notion of intelligence. The Times asked ChatGPT, “When did The New York Times first report on ‘artificial intelligence’?” The answer cited a non-existent article that the chatbot had simply made up. The “inaccuracies” that emerge from chatbots and other such programs are called “hallucinations” in the technology industry.

The basic technology underlying the chatbots is the Large Language Model (LLM), which is built by analyzing enormous amounts of text from various sources, usually the Internet. The goal is to find patterns in the data, and then use those learned patterns of word usage, which capture both word frequencies and English grammar, to compose a response. The Times notes:

Because the internet is filled with untruthful information, the technology learns to repeat the same untruths. And sometimes the chatbots make things up. They produce new text, combining billions of patterns in unexpected ways. This means even if they learned solely from text that is accurate, they may still generate something that is not… And if you ask the same question twice, they can generate different text. (NY Times, italics added)
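The behavior the Times describes, recombining learned patterns into new text and giving different answers to the same prompt, can be seen even in a toy model. The sketch below is a bigram (word-pair frequency) generator, far simpler than a real LLM, which uses a neural network over tokens rather than explicit word counts; the two training sentences and the function names are invented for illustration. Every sentence it learns from is true, yet because it samples each next word in proportion to how often it was observed, it can stitch fragments into a false statement, and different random seeds produce different outputs.

```python
import random
from collections import defaultdict


def train_bigrams(sentences: list[str]) -> dict[str, list[str]]:
    """Record, for each word, every word observed immediately after it."""
    followers: dict[str, list[str]] = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)  # duplicates encode frequency
    return dict(followers)


def generate(model: dict[str, list[str]], start: str, rng: random.Random, max_words: int = 10) -> str:
    """Extend `start` by repeatedly sampling a follower in proportion to its frequency."""
    words = [start]
    while len(words) < max_words and words[-1] in model:
        words.append(rng.choice(model[words[-1]]))
    return " ".join(words)


# Both training sentences are true.
corpus = ["paris is the capital of france", "rome is the capital of italy"]
model = train_bigrams(corpus)

# The same "question" (starting word) posed with different random seeds.
outputs = {generate(model, "paris", random.Random(seed)) for seed in range(100)}
print(sorted(outputs))
```

Both possible outputs are fluent; only one is true. Nothing in the procedure distinguishes them, because the model tracks which words follow which, not what the words refer to.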

The root of the problem is the inability of the programs to perceive reality, or understand truth. Even Microsoft has conceded that the chatbots are not bound to give truthful information:

The new A.I. systems are “built to be persuasive, not truthful,” an internal Microsoft document said. “This means that outputs can look very realistic but include statements that aren’t true.” (Ibid.)

Such behavior, of course, is completely different from research done by a real person, who finds sources and then critically filters and analyzes them, seeking to extract the most important and best-justified conclusions.

Complexification and the Real Dangers Associated with AI

AI is a response to the complexification of our modern industrial/information society. Interconnectedness, specialization, and the drive for efficiency combine to push society to ever higher degrees of integration and complexity. Hence, hype around the potential dangers of AI, though understandable, is misplaced. The dangers arise not because an AI system wants to take over the world, or to act like HAL from the motion picture 2001: A Space Odyssey. Rather, the real danger lurks in the complexification of society. As computer-based systems are used to operate functions spanning more components of society, such as the power grid, the dangers associated with malfunction naturally increase:

- The system encounters a situation for which it was not programmed, and does something that leads to catastrophe.

- The system is hacked by a malicious actor, who causes it to malfunction.

- Interaction of the components of the overall system leads to unanticipated instabilities.

- An undiscovered programming bug causes the system to malfunction, with potentially catastrophic results.

- And perhaps most important, the societal cost of the AI systems will outstrip the value that they add.

It will always be necessary to keep people in the loop who can supply the connection with the real world that the systems lack.

Next in series: The AI paradigm of knowing and its problems (Part 2 of Technology and the limitations of artificial intelligence)


Posted by: djpbrennan4960

Jun. 10, 2024 7:34 AM ET USA

For a fourth-year university course 45 years ago, I was assigned to do an essay on Artificial Intelligence. I still have two books, Joseph Weizenbaum's "Computer Power and Human Reason" and Hubert L. Dreyfus' "What Computers Can't Do". Weizenbaum's was the more philosophical of the two. I also recall Marvin Minsky's paper, "The Artificial Intelligence of Hubert L. Dreyfus". 45 years later, Minsky's cancel culture and optimism in unbuilt systems continue and prevail.